Serverless ML Inference: Cost-Effective Options & Cloud Comparison


Introduction

Which cloud providers offer serverless containers with autoscaling for ML inference, and which serverless options are the most cost-effective for machine learning in the cloud? In 2025, serverless ML inference is a popular choice for businesses and developers who want scalable deployment without paying for idle capacity. This guide compares the serverless offerings from AWS, Google Cloud, and Azure, covering GPU support, autoscaling, cold start mitigation, and LLM hosting, so you can make an informed decision.

Understanding Serverless ML Inference

Serverless ML inference allows you to run machine learning models without managing servers. Resources automatically scale based on demand, and you pay only for actual compute usage. Serverless is ideal for:

- Bursty or unpredictable workloads

- Event-driven ML tasks

- Small to medium CPU-based models

- Rapid prototyping and proof-of-concept

Key benefits include operational simplicity, automatic scaling, and cost savings on idle resources.

Which Cloud Providers Offer Serverless Containers with Autoscaling for ML Inference?

| Cloud Provider | Serverless Option | Autoscaling | GPU Support | Pricing Model |
|---|---|---|---|---|
| AWS | SageMaker Serverless Inference, Lambda + EKS Fargate | Yes | No for these options (GPUs require SageMaker endpoints on GPU instances) | Per-ms / per-invocation |
| Google Cloud | Cloud Run, Vertex AI Predictions | Yes | Yes | Per-100ms CPU+Mem |
| Azure | Azure Functions, Azure Container Apps | Yes | Limited | Per-invocation |

These platforms handle infrastructure management, and most of them can scale to zero when idle, which simplifies ML deployment. Check the scale-to-zero behavior of each service before relying on it, because some managed prediction endpoints keep a minimum number of nodes running.

The Most Cost-Effective Serverless Options for ML Inference

Cost-effectiveness depends on workload type:

- CPU-based models: AWS SageMaker Serverless, Google Cloud Run, Azure Container Apps

- GPU-accelerated models: AWS SageMaker endpoints on GPU instances (Lambda and SageMaker Serverless Inference do not offer GPUs), GCP Vertex AI with custom GPU scaling

- Dockerized ML models: AWS ECS/Fargate, Google Cloud Run, Azure Container Instances

Serverless is generally cheaper for sporadic, bursty workloads. Dedicated containers often win for steady high-throughput inference.

Serverless ML vs Dedicated Containers for LLM Hosting

| Feature | Serverless | Dedicated Containers |
|---|---|---|
| Management | Low | High |
| Auto-Scaling | Yes | Limited (manual/auto-scaling setup required) |
| Cold Start Latency | Medium-High | Low |
| Cost | Pay-per-use, cheaper for bursty workloads | Fixed cost, cheaper for consistent heavy workloads |
| GPU Support | Limited & per-use | Full control, optimized for performance |

Scenario Examples:

- Startups with bursty traffic: Serverless is cheaper and scales automatically.

- Medium-sized e-commerce with predictable peaks: Serverless with provisioned concurrency balances cost and latency.

- High-consistency, low-latency enterprise workloads: Dedicated GPU instances are more cost-effective.

- Large LLM inference: Pay-per-token APIs or serverless GPU platforms for variable workloads; dedicated GPU clusters for constant high-volume inference.

Key Cost Drivers for Serverless ML Inference

- Compute Duration (Invocation Time): Billed per millisecond or per 100 ms, depending on the platform. Longer inference = higher cost.

- Memory/vCPU Allocation: More memory and CPU = higher per-invocation cost.

- Number of Invocations: Frequent requests increase total cost.

- Cold Starts: Extra initialization time increases billed duration.

- Data Transfer (Egress): Moving large inputs/outputs incurs costs.

- GPU vs CPU: GPU inference is faster but more expensive per unit time.
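
As a rough illustration of how these drivers combine, the sketch below estimates a monthly bill from them. The `Workload` fields, the `monthly_cost` function, and all price parameters are hypothetical names chosen for this example. Real billing models differ per platform (some charge vCPU and memory separately, for instance), so treat it as a back-of-the-envelope model rather than any provider's formula.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    invocations_per_month: int   # number of inference requests
    avg_duration_ms: float       # billed duration of a warm request
    memory_gb: float             # memory allocated to the function/container
    cold_start_fraction: float   # share of requests that hit a cold start (0..1)
    cold_start_extra_ms: float   # extra billed time added by one cold start
    egress_gb: float             # data transferred out per month


def monthly_cost(w: Workload,
                 price_per_gb_second: float,
                 price_per_million_requests: float,
                 price_per_egress_gb: float) -> float:
    """Estimate a monthly bill from the cost drivers listed above.

    All prices are caller-supplied assumptions, not real provider rates.
    """
    # Average extra billed time per request caused by cold starts.
    cold_ms = w.cold_start_fraction * w.cold_start_extra_ms
    billed_seconds = w.invocations_per_month * (w.avg_duration_ms + cold_ms) / 1000.0

    compute = billed_seconds * w.memory_gb * price_per_gb_second
    requests = w.invocations_per_month / 1_000_000 * price_per_million_requests
    egress = w.egress_gb * price_per_egress_gb
    return compute + requests + egress
```

Plugging in the current list prices for the platform you are evaluating, and then varying `cold_start_fraction` or `memory_gb`, gives a quick sense of which driver dominates your bill.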

Mitigation strategies:

- Keep containers warm with periodic pings

- Optimize container image size

- Use lighter model versions

- Provision concurrency where needed
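
The "periodic pings" strategy can be sketched with the Python standard library alone. This is a minimal example, assuming your endpoint exposes a cheap health-check URL; the `keep_warm` and `ping` names, the interval, and the URL in the usage note are hypothetical. In practice a managed scheduler usually sends the pings instead of a long-running script, and each ping is typically billed as an invocation.

```python
import time
import urllib.request


def ping(url: str, timeout_s: float = 5.0) -> int:
    """Send one GET request and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=timeout_s) as resp:
        return resp.status


def keep_warm(url: str, interval_s: float, rounds: int,
              send=ping, sleep=time.sleep) -> list:
    """Ping `url` `rounds` times, waiting `interval_s` seconds between pings.

    Returns the status code of each ping, or None when a ping failed.
    `send` and `sleep` are injectable so the loop can be tested offline.
    """
    statuses = []
    for i in range(rounds):
        try:
            statuses.append(send(url))
        except OSError:  # urllib's URLError and HTTPError derive from OSError
            statuses.append(None)
        if i < rounds - 1:
            sleep(interval_s)
    return statuses
```

A call such as `keep_warm("https://example.com/health", interval_s=300, rounds=12)` would ping a placeholder endpoint every five minutes for about an hour. The interval should be shorter than the platform's idle timeout, which varies by provider.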

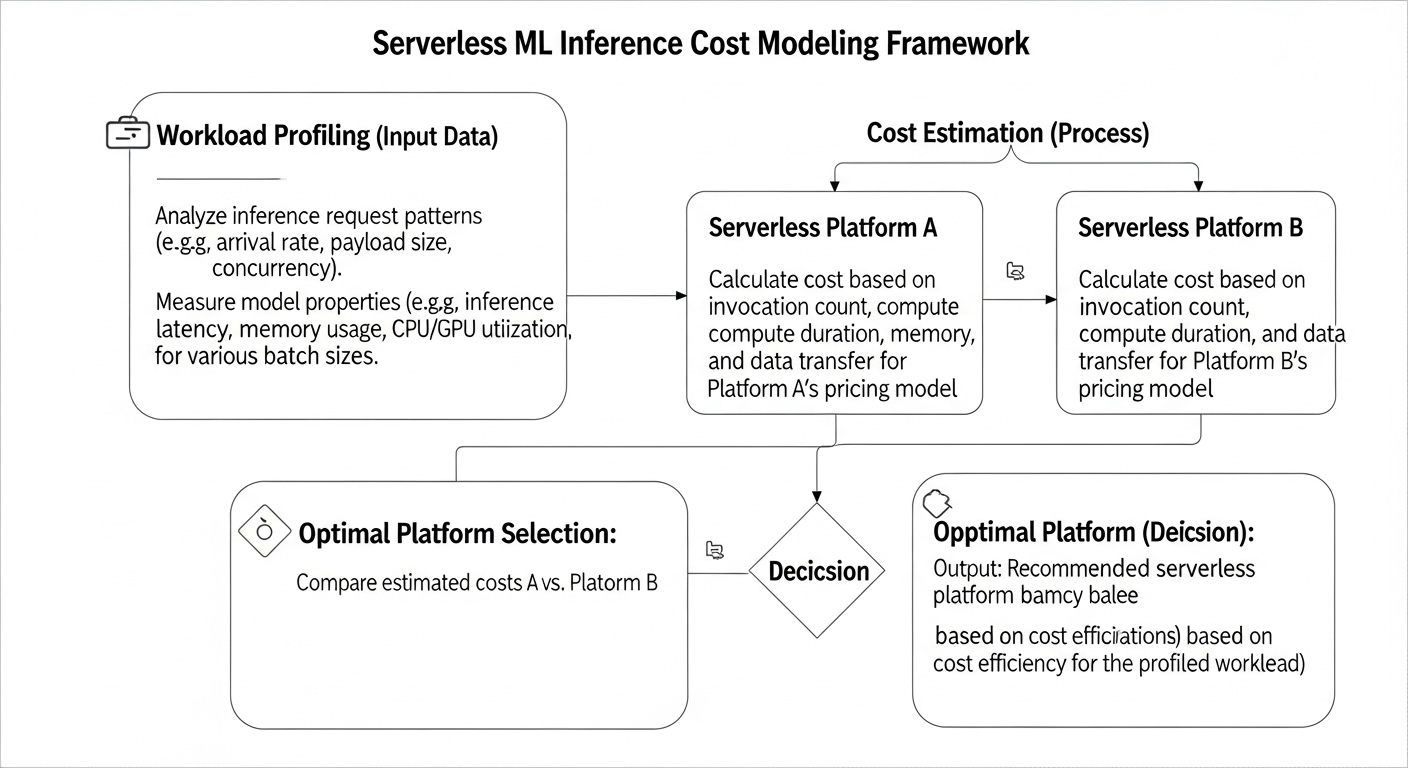

Practical Decision Framework for Serverless ML

- Assess Workload: Bursty vs constant traffic, latency tolerance.

- Characterize Model: Size, complexity, CPU/GPU needs.

- Estimate Costs: Include compute, memory, invocations, and data transfer.

- Include Operational Overhead: Server management, monitoring, scaling.

- Compare Scenarios: Serverless vs dedicated; evaluate break-even points.

- Start Small and Iterate: Begin with serverless, scale or migrate as usage grows.
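
The break-even comparison in this framework can be worked through with a few lines of arithmetic. The sketch below assumes a simplified model in which serverless cost grows linearly with request volume and a dedicated deployment has a flat monthly price. The function names and every number passed to them are illustrative assumptions, not provider pricing.

```python
def serverless_monthly_cost(requests: int,
                            seconds_per_request: float,
                            price_per_second: float) -> float:
    """Pay-per-use cost: grows linearly with the number of requests."""
    return requests * seconds_per_request * price_per_second


def break_even_requests(dedicated_monthly_cost: float,
                        seconds_per_request: float,
                        price_per_second: float) -> float:
    """Monthly request volume at which both options cost the same."""
    return dedicated_monthly_cost / (seconds_per_request * price_per_second)


def cheaper_option(requests: int,
                   dedicated_monthly_cost: float,
                   seconds_per_request: float,
                   price_per_second: float) -> str:
    """Return 'serverless' or 'dedicated' for the given monthly volume."""
    serverless = serverless_monthly_cost(requests, seconds_per_request, price_per_second)
    return "serverless" if serverless < dedicated_monthly_cost else "dedicated"
```

Below the break-even volume serverless is the cheaper choice, and above it the dedicated deployment wins. A fuller comparison would also price in the operational overhead of running dedicated infrastructure, which this model leaves out.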

Cold Start Considerations

Cold starts affect latency and cost, especially for large ML models or GPU inference:

- Extra time for initializing functions and loading models

- Mitigated with provisioned concurrency, container optimization, and pre-warming strategies

- Crucial for latency-sensitive applications like real-time LLM inference
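
One common way to limit the model-loading part of a cold start is to load the model once per container and reuse it across invocations. The sketch below shows that pattern in generic Python. `load_model` is a stand-in for whatever expensive loading your framework performs, and the `handler(event)` signature is a hypothetical one rather than a specific platform's API.

```python
import time

_MODEL = None  # cached for as long as the container instance stays warm


def load_model():
    """Stand-in for an expensive load (reading weights, building a graph)."""
    time.sleep(0.05)  # simulate load time
    # Toy "model": returns the mean of the input features.
    return lambda features: sum(features) / len(features)


def get_model():
    """Load the model on first use, then return the cached instance."""
    global _MODEL
    if _MODEL is None:
        _MODEL = load_model()
    return _MODEL


def handler(event: dict) -> dict:
    """Hypothetical request handler: only the first call pays the load cost."""
    model = get_model()
    return {"prediction": model(event["features"])}
```

With this structure only the first request on a new instance pays the loading cost, and later requests on the same warm instance skip it. It does not remove the cold start itself, so it works best alongside provisioned concurrency or pre-warming.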

Serverless ML Inference FAQs

1. What is serverless inference?

Serverless inference allows ML models to run in the cloud without managing servers. Autoscaling adjusts resources automatically, and you pay only for compute usage.

2. Which cloud providers offer serverless containers with autoscaling for ML inference?

- AWS: SageMaker Serverless, Lambda + EKS Fargate

- Google Cloud: Cloud Run, Vertex AI Predictions

- Azure: Azure Functions, Azure Container Apps

3. What are the most cost-effective serverless options for ML inference?

- CPU-based: AWS SageMaker Serverless, Google Cloud Run, Azure Container Apps

- GPU-based: AWS SageMaker endpoints on GPU instances (Lambda and SageMaker Serverless Inference do not offer GPUs), GCP Vertex AI with GPU scaling

4. How do serverless ML inference costs compare to dedicated containers?

- Serverless: Cheaper for bursty, unpredictable workloads

- Dedicated containers: Cheaper for steady, high-volume inference

5. How to reduce cold start times?

- Use pre-warmed instances

- Optimize container images and dependencies

- Periodically ping functions

6. How to choose a serverless platform for LLM inference?

- Check GPU availability

- Evaluate auto-scaling efficiency

- Analyze cold start latency and pricing

- Ensure integration with your CI/CD pipelines

Conclusion

Serverless ML inference in 2025 offers scalable, operationally efficient, and cost-effective options for ML deployment. By carefully evaluating traffic patterns, model size, GPU needs, and cold start mitigation strategies, you can decide when serverless is the best choice and when dedicated containers or GPU clusters make more financial sense.

With the frameworks, tables, and scenarios in this guide, you can make your ML deployment strategy data-driven, cost-optimized, and aligned with your business needs.
