Unleash Creativity: The Ultimate Guide to Open-Source AI Art Generators for Commercial Use (Licensing & Pro Tips)

In today’s rapidly evolving digital landscape, AI art generators have become powerful tools for creatives, businesses, and hobbyists alike. Proprietary platforms offer convenience, but open-source AI art generators give commercial users far more flexibility and control, and they are often more cost-effective. Using open-source tools for commercial work comes with a critical caveat, though: you need to understand how licensing and copyright apply to what you create.

This comprehensive guide will walk you through the top open-source options, demystify their licensing models, and provide invaluable tips to ensure your AI-generated art is not only stunning but also legally compliant for commercial application.

Key Takeaways:

- Stable Diffusion Dominates: The Stable Diffusion ecosystem is the leading open-source choice for commercial AI art, offering robust models and versatile interfaces.

- Licensing is Layered: Always check the specific licenses for both the core AI model (e.g., Stable Diffusion) and any third-party components (e.g., fine-tuned models, upscalers, UI elements) you use. Being labeled ‘open-source’ does not automatically make something free for every commercial use.

- Copyright is Complex: Purely AI-generated art may not be copyrightable in some jurisdictions (like the US) without significant human creative input. That doesn’t prevent you from selling it, but it limits your ability to stop others from copying it.

- Due Diligence is Essential: Maintain meticulous records of your generation process, verify licenses for every asset, and avoid prompting for copyrighted material.

- Iterate and Transform: Actively engage with the AI output through editing and refining to add human creativity and potentially strengthen your claim to authorship.

Why Choose Open-Source for Commercial AI Art?

The allure of open-source AI art generators for businesses stems from several compelling advantages over their closed-source counterparts:

- Unmatched Customization: Open-source tools allow you to tweak, modify, and fine-tune models to suit highly specific artistic styles or brand needs. This level of control is rarely available with proprietary services.

- Cost Efficiency: While powerful hardware may be required for local operation, eliminating recurring subscription fees can lead to significant long-term savings for high-volume commercial users.

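- Transparency: You can inspect the code, read published model cards (which often describe known limitations and biases), and, by running models locally, keep prompts and outputs on your own hardware, fostering greater trust. Note that open model weights do not necessarily mean the training data is disclosed.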

- Community Support: Vibrant communities often grow around open-source projects, offering extensive support, tutorials, and a constant stream of innovative add-ons and models.

- Future-Proofing: You’re not locked into a single vendor’s ecosystem, providing more stability and adaptability as the AI landscape evolves.
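Whether the cost savings materialize depends on your volume. A simple break-even estimate divides the upfront hardware cost by the monthly saving: the subscription you no longer pay minus what local generation costs to run (electricity, maintenance). The sketch below assumes you can estimate those three figures yourself; the numbers in the example call are purely hypothetical.

```python
import math
from typing import Optional


def break_even_months(
    hardware_cost: float,
    monthly_subscription: float,
    monthly_running_cost: float,
) -> Optional[int]:
    """Months until a local setup pays for itself, or None if it never does.

    hardware_cost:        one-off spend on the GPU/workstation
    monthly_subscription: what a hosted service would cost per month
    monthly_running_cost: electricity, maintenance, etc. for local generation
    """
    monthly_saving = monthly_subscription - monthly_running_cost
    if monthly_saving <= 0:
        return None  # running locally costs as much as (or more than) subscribing
    # Round up: a partial month still has to be paid for.
    return math.ceil(hardware_cost / monthly_saving)


# Hypothetical figures: 2,000 of hardware replacing a 120/month plan,
# with 20/month in running costs, i.e. a saving of 100/month.
print(break_even_months(2000, 120, 20))  # 20
```

At low volume the saving can be small or negative, in which case a subscription stays the cheaper option.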

Understanding AI Art Licensing: A Commercial Imperative

Navigating the legal landscape of AI-generated art is perhaps the most critical challenge for commercial users. Unlike traditional software, AI art involves several layers of intellectual property, and what’s permissible can be confusing. Users on platforms like Reddit and Quora frequently ask whether they can legally sell images generated by AI.

The Core Distinction: Model Licenses vs. Software Licenses

When using open-source AI art generators, you’re interacting with two primary types of licenses:

- The Underlying AI Model License: This governs the generative AI model itself (e.g., Stable Diffusion). This is paramount for commercial use.

- The Interface/Software License: This governs the application or interface (e.g., Automatic1111, ComfyUI) that allows you to interact with the model.

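A key player in the open-source AI art space is Stability AI, the company behind Stable Diffusion. Widely used Stable Diffusion releases carry licenses that explicitly permit commercial use: Stable Diffusion 1.5 uses the CreativeML Open RAIL-M license, and Stable Diffusion XL (SDXL) 1.0 uses the CreativeML Open RAIL++-M license. These are not permissive licenses in the traditional open-source sense, because they include use-based restrictions (a list of prohibited uses) that you must follow. It’s also crucial to note that Stability AI has introduced paid commercial tiers for some of its newer, more advanced models (e.g., SDXL Turbo, Stable Diffusion 3.5). Stable Diffusion 3.5, for example, is released under the Stability AI Community License, which allows free commercial use only below an annual revenue threshold (US$1 million at the time of writing); enterprises above that threshold need a paid license.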
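Because the terms differ per model, it can help to record them in one place and check them before a project starts. The sketch below is a simplified summary of the licenses described in this section, not legal advice: the model keys are hypothetical labels, `commercial_use=True` still means "subject to that license’s use restrictions", and the US$1 million ceiling for the Stability AI Community License is an assumption based on the terms at the time of writing, so confirm it on the current model card.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelLicense:
    name: str
    commercial_use: bool
    # Annual revenue (USD) at or above which a separate enterprise license is
    # needed; None means the license sets no revenue ceiling.
    revenue_ceiling_usd: Optional[int] = None


# Hypothetical keys; simplified summary of the licenses discussed above.
MODEL_LICENSES = {
    "sd-1.5": ModelLicense("CreativeML Open RAIL-M", commercial_use=True),
    "sdxl-1.0": ModelLicense("CreativeML Open RAIL++-M", commercial_use=True),
    "sd-3.5": ModelLicense(
        "Stability AI Community License",
        commercial_use=True,
        revenue_ceiling_usd=1_000_000,  # assumption: verify current terms
    ),
}


def free_commercial_use(model_key: str, annual_revenue_usd: int) -> bool:
    """True if the model's license allows commercial use without a paid tier."""
    lic = MODEL_LICENSES.get(model_key)
    if lic is None or not lic.commercial_use:
        return False  # unknown or non-commercial: treat as not permitted
    ceiling = lic.revenue_ceiling_usd
    if ceiling is not None and annual_revenue_usd >= ceiling:
        return False  # an enterprise license is required at this revenue
    return True


print(free_commercial_use("sd-3.5", 250_000))    # True
print(free_commercial_use("sd-3.5", 5_000_000))  # False
```

Treating unknown models as "not permitted" mirrors the cautious default this guide recommends for community assets.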

Creative Commons & Community Models: Proceed with Caution

Many fine-tuned models, LoRAs (Low-Rank Adaptation), and other assets found on community platforms like Hugging Face or Civitai come with their own licenses: sometimes Creative Commons licenses, sometimes custom terms set by the creator. Be extremely wary of licenses like “CC BY-NC-SA” (Creative Commons Attribution-NonCommercial-ShareAlike), as the “NonCommercial” clause prohibits commercial use. Always scrutinize the specific license for *each* model or component you integrate into your workflow.
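Creative Commons labels are built from a small set of clauses (BY = attribution, NC = NonCommercial, ND = NoDerivatives, SA = ShareAlike), so once you have the label, the commercial question can be answered mechanically. A minimal sketch, assuming labels written like “CC BY-NC-SA 4.0” or the lowercase Hugging Face style “cc-by-nc-4.0”; it does not handle custom or non-CC licenses and rejects them instead.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class CCTerms:
    attribution_required: bool
    commercial_allowed: bool
    derivatives_allowed: bool
    share_alike: bool


def parse_cc_license(label: str) -> CCTerms:
    """Read the clauses out of a Creative Commons license label."""
    # Split "CC BY-NC-SA 4.0" / "cc-by-nc-sa-4.0" into lowercase tokens.
    tokens = set(re.split(r"[\s\-_]+", label.strip().lower()))
    if "cc0" in tokens:
        # CC0 is a public-domain dedication: no conditions at all.
        return CCTerms(False, True, True, False)
    if "cc" not in tokens or "by" not in tokens:
        raise ValueError(f"not a recognised Creative Commons label: {label!r}")
    return CCTerms(
        attribution_required=True,
        commercial_allowed="nc" not in tokens,
        derivatives_allowed="nd" not in tokens,
        share_alike="sa" in tokens,
    )


terms = parse_cc_license("CC BY-NC-SA 4.0")
print(terms.commercial_allowed)  # False: the NC clause forbids commercial use
```

The same parsed result also tells you whether attribution is required, which matters when you publish the final work.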

The Evolving Landscape of AI Art Copyright

A major point of discussion in online communities is the copyrightability of AI-generated art. In jurisdictions like the United States, the Copyright Office’s current position is that purely AI-generated art, without significant human creative input, is generally not eligible for copyright protection. This doesn’t necessarily prevent you from selling or using the images commercially, but it means you might not be able to legally prevent others from copying or using your AI-generated output.

However, if you significantly modify, arrange, or combine AI-generated elements with your own human creative contributions, those human contributions (and potentially the resulting combined work) *can* be copyrightable.

Top Open-Source AI Art Generators for Commercial Use

When it comes to open-source AI art generators viable for commercial use, the ecosystem around Stable Diffusion stands out as the most mature and versatile. These are not just single applications but often a combination of powerful models and user-friendly interfaces.

The Stable Diffusion Ecosystem: Your Commercial Hub

Stable Diffusion is a widely used family of latent diffusion models, originally developed by researchers at CompVis (LMU Munich) and Runway with Stability AI’s backing, capable of generating photorealistic and stylized images from text and image prompts. Its models are generally released under licenses that permit commercial use (subject to the terms outlined earlier), making it a cornerstone for businesses.

To use Stable Diffusion effectively, you’ll typically interact with it through various open-source user interfaces (UIs) or frameworks:

1. Automatic1111 Stable Diffusion WebUI

- What it is: A highly popular, feature-rich web-based UI for Stable Diffusion, offering extensive customization, control, and a vast array of extensions. It’s often the go-to for enthusiasts and professionals alike.

- Commercial Use: The Automatic1111 WebUI itself is open-source under the AGPLv3 license, which allows commercial use of the software. If you modify the WebUI and let other people use your modified version over a network, the AGPL requires you to offer them its source code. Like all interfaces, though, the commercial viability of your output depends on the licenses of the *specific Stable Diffusion models* and any custom models/LoRAs you load into it. Always verify the license of each model you download.

- Pro Tip: Leverage its extensive scripting capabilities and extensions (like ControlNet) to achieve precise control over your image generation, making it easier to meet commercial project specifications and add unique human creative input.
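One way to script Automatic1111 is its built-in HTTP API, which is available when the WebUI is launched with the `--api` flag. The sketch below assumes a local instance on the default port 7860 and uses only the Python standard library; it fixes the seed so a commercial asset can be regenerated exactly later. The payload keys are common `/sdapi/v1/txt2img` fields, but the full schema varies between WebUI versions, so check the `/docs` page of your own instance.

```python
import base64
import json
import urllib.request


def build_txt2img_payload(
    prompt: str,
    negative_prompt: str = "",
    seed: int = 12345,
    steps: int = 30,
    width: int = 1024,
    height: int = 1024,
    cfg_scale: float = 7.0,
    sampler_name: str = "Euler a",
) -> dict:
    """Request body for the WebUI txt2img endpoint (fixed seed, not -1/random)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "seed": seed,
        "steps": steps,
        "width": width,
        "height": height,
        "cfg_scale": cfg_scale,
        "sampler_name": sampler_name,
    }


def txt2img(payload: dict, base_url: str = "http://127.0.0.1:7860") -> list:
    """POST the payload and return the generated images as PNG bytes."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # The API returns each image as a base64-encoded string.
    return [base64.b64decode(image) for image in body["images"]]
```

Usage: `txt2img(build_txt2img_payload("flat vector illustration of a lighthouse at dawn"))` returns a list of PNG byte strings to write to disk. Saving the payload next to each image gives you an exact recipe for your records.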

2. ComfyUI

- What it is: A powerful and flexible node-based UI for Stable Diffusion, known for its modularity and efficiency. It provides a visual workflow builder that appeals to users who want fine-grained control over every step of the image generation process.

- Commercial Use: ComfyUI is open-source under the GPLv3 license, which permits commercial use of the software. As with Automatic1111, the commercial rights for the generated images hinge entirely on the licenses of the specific models and third-party nodes/components you integrate. ComfyUI is also widely used in commercial production pipelines.

- Pro Tip: ComfyUI’s graph-based workflow is ideal for creating reproducible and complex pipelines for commercial projects, ensuring consistency across a series of images or variations.
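ComfyUI can export a workflow in an API format, in which each node is keyed by an ID and has a `class_type` and its `inputs`. Hashing a canonical serialization of that JSON gives every commercial render a short fingerprint, so you can later show which exact graph and seed produced it. The workflow below is a simplified, hypothetical fragment in that shape (node links are omitted), not a complete runnable graph.

```python
import copy
import hashlib
import json


def workflow_fingerprint(workflow: dict) -> str:
    """SHA-256 of the workflow serialized with sorted keys and no whitespace,
    so the same graph always yields the same hash regardless of key order."""
    canonical = json.dumps(workflow, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def with_seed(workflow: dict, sampler_node_id: str, seed: int) -> dict:
    """Return a copy of the workflow with the sampler's seed replaced."""
    variant = copy.deepcopy(workflow)  # never mutate the approved template
    variant[sampler_node_id]["inputs"]["seed"] = seed
    return variant


# Hypothetical, simplified fragment (node links omitted for brevity).
TEMPLATE = {
    "4": {
        "class_type": "CheckpointLoaderSimple",
        "inputs": {"ckpt_name": "sd_xl_base_1.0.safetensors"},
    },
    "3": {
        "class_type": "KSampler",
        "inputs": {"seed": 0, "steps": 30, "cfg": 7.0, "sampler_name": "euler",
                   "scheduler": "normal", "denoise": 1.0},
    },
}

for seed in (101, 102):
    print(seed, workflow_fingerprint(with_seed(TEMPLATE, "3", seed))[:12])
```

Keeping the fingerprint next to each exported image, together with the unmodified JSON, lets you audit or regenerate a series later. Pixel-identical output also depends on using the same model files, custom nodes, and often the same software and GPU setup.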

3. InvokeAI

- What it is: An open-source creative engine also built on Stable Diffusion models, designed for both professionals and enthusiasts. InvokeAI offers a robust web-based UI and has been adopted by professional studios.

- Commercial Use: InvokeAI’s Community Edition is free and open-source (Apache 2.0 license), allowing local installation and commercial use. Its commercial products (cloud-based tiers) also permit commercial use of generated images, and the company states that users retain 100% ownership of their generated assets (whether those assets are copyrightable is a separate legal question). It explicitly states that “every part of our technology stack permits commercial use.”

- Pro Tip: InvokeAI focuses on a streamlined workflow for creative production, making it a strong contender for teams seeking a professional-grade open-source solution with clear commercial permissions.

Other Notable Open-Source Contenders

4. Flux.1

- What it is: A newer open-weight model family from Black Forest Labs, aiming to set new benchmarks in image detail and prompt adherence. Flux.1 Schnell is gaining traction for its speed and quality.

- Commercial Use: Flux.1 Schnell is released under the permissive Apache 2.0 license, making it suitable for commercial use. Flux.1 Dev also has downloadable weights but is licensed for non-commercial use, and Flux.1 Pro is available only through paid APIs, so always double-check the license of the specific variant you use.

- Pro Tip: As a rapidly developing model, Flux.1 offers cutting-edge capabilities. Integrate it into your existing open-source UI (like ComfyUI) to experiment with its advanced features for high-quality commercial outputs.

Essential Tips for Commercial AI Art Creation

Beyond choosing the right generator, strategic practices are vital for successful and compliant commercial use of AI art.

1. Always Verify Licenses Rigorously

This cannot be stressed enough. For every model, LoRA, embedding, or ControlNet you download from community repositories, find and read its specific license. A model’s output license might differ from the software’s license. Assume non-commercial use unless explicitly stated otherwise. If in doubt, avoid commercial use or seek clarification directly from the creator.
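The “assume non-commercial” rule translates naturally into a default-deny check: list every component in a workflow, and block anything whose license is missing or not on a list your team has reviewed. The allowlist entries below follow Hugging Face-style license tags but are only an example, and the LoRA and embedding names are hypothetical; deciding what belongs on the list is up to you.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Example allowlist: licenses someone has actually reviewed for commercial
# output. Anything not listed here (or with no license at all) is blocked.
APPROVED_FOR_COMMERCIAL = {
    "apache-2.0",
    "mit",
    "creativeml-openrail-m",
    "openrail++",
    "cc-by-4.0",
}


@dataclass(frozen=True)
class Component:
    name: str
    kind: str                  # e.g. "checkpoint", "lora", "embedding", "upscaler"
    license_id: Optional[str]  # None when the download page lists no license


def blocking_components(components: Iterable[Component]) -> List[str]:
    """Describe every component that is NOT cleared for commercial use."""
    blocked = []
    for component in components:
        license_id = (component.license_id or "").strip().lower()
        if license_id not in APPROVED_FOR_COMMERCIAL:
            shown = component.license_id or "no license"
            blocked.append(f"{component.name} ({component.kind}): {shown}")
    return blocked


workflow = [
    Component("sd_xl_base_1.0", "checkpoint", "openrail++"),
    Component("watercolor-style", "lora", "cc-by-nc-4.0"),  # hypothetical
    Component("ink-outline", "embedding", None),            # hypothetical
]
for problem in blocking_components(workflow):
    print("BLOCKED:", problem)
```

An empty result means every component has a reviewed license; it does not replace reading each license’s use restrictions.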

2. Maintain Meticulous Records

Keep a detailed log of every component used to generate your commercial art: the specific AI model version, any LoRAs or embeddings, the UI used, and most importantly, the date and the specific license associated with each component at the time of creation. This documentation is crucial for legal due diligence.
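A lightweight way to keep such a log is one JSON line per delivered image. The sketch below assumes you note the license identifier and URL yourself at download time; it also stores a SHA-256 hash of each model file, so you can later show exactly which file was used even if the download page changes. All field names are illustrative, not a standard format.

```python
import hashlib
import json
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from pathlib import Path
from typing import List, Optional


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly multi-GB) model file in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


@dataclass
class ComponentRecord:
    name: str
    version: str
    license_id: str
    license_url: str
    sha256: Optional[str] = None


@dataclass
class GenerationRecord:
    image_file: str
    ui: str
    prompt: str
    seed: int
    components: List[ComponentRecord]
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(log_path: Path, record: GenerationRecord) -> None:
    """Append one JSON line per image; asdict() also converts nested records."""
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model = Path(tmp, "model.safetensors")
        model.write_bytes(b"stand-in for real model weights")
        record = GenerationRecord(
            image_file="banner_v3.png",
            ui="ComfyUI",
            prompt="isometric city at night, neon signage",
            seed=123456,
            components=[
                ComponentRecord("example-checkpoint", "1.0", "openrail++",
                                "https://example.com/license",
                                sha256_of_file(model)),
            ],
        )
        log = Path(tmp, "provenance.jsonl")
        append_record(log, record)
        print(log.read_text(encoding="utf-8"))
```

An append-only JSON Lines file is easy to grep, diff, and hand to a client or lawyer without special tooling.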

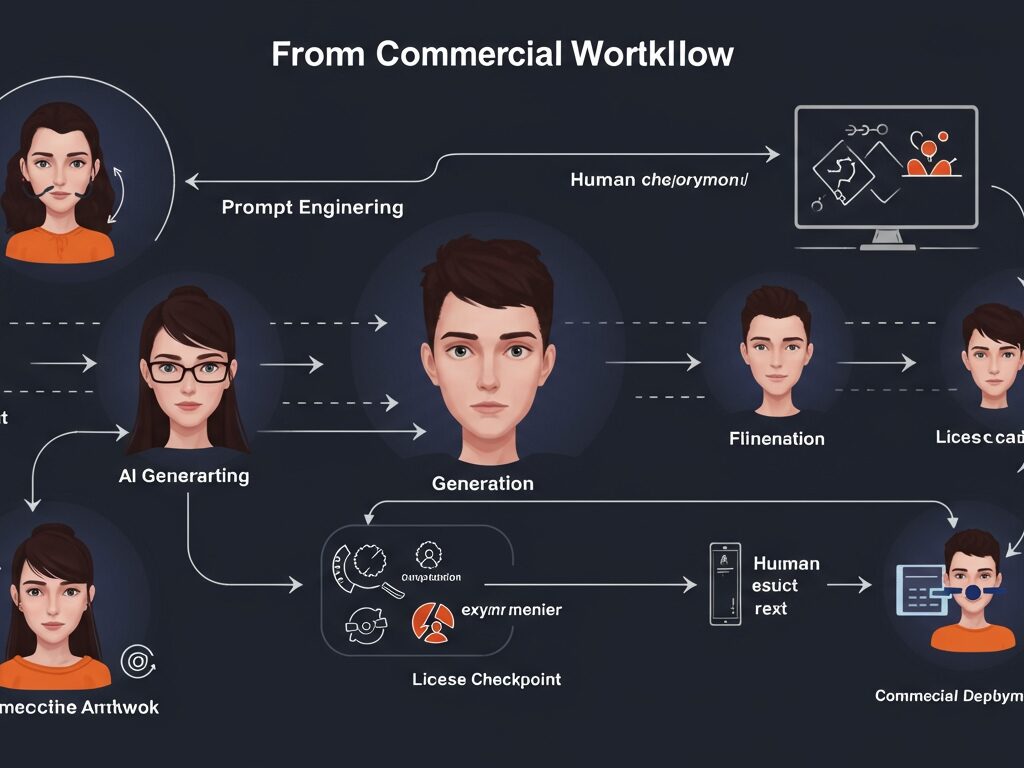

3. Leverage Prompt Engineering as a Creative Skill

Your prompts are your primary creative input. Invest time in learning advanced prompt engineering techniques to guide the AI towards unique and desired outputs. Don’t rely on prompts alone for authorship, however: the US Copyright Office’s January 2025 report on copyrightability concluded that prompts by themselves generally do not give users enough control over the output to be considered its authors. Your selection, arrangement, and editing of the results carry more weight.

4. Embrace Iteration and Transformation

Don’t just use the first image the AI generates. Treat AI as a creative partner. Iterate on prompts, refine outputs through inpainting or outpainting, combine multiple AI-generated elements, or significantly alter images with traditional editing software. This active human intervention adds value and creative expression, which can bolster your claim of authorship.

5. Avoid Copyrighted Reference Material

While AI models are trained on vast datasets, including copyrighted imagery, directly prompting for copyrighted characters or trademarked logos can produce output that infringes on those rights, and imitating the style of a specific living artist without permission invites legal and reputational trouble. Focus on generating original concepts to minimize legal risk. This is a frequently raised concern on platforms like Quora and Reddit.

6. Consider Transparency (Where Applicable)

Depending on your industry and client expectations, being transparent about the use of AI in your commercial work can build trust. While not always legally required for commercial use, it’s an ethical consideration, particularly when working with clients who value originality or want to understand your process.

Beyond Generation: Editing & Upscaling for Commercial Quality

Generating an image is often just the first step for commercial use. To meet professional standards, you’ll likely need to refine and enhance the AI’s output:

- Upscaling: AI-generated images, especially from older models, might not be at print-ready resolutions. Use dedicated AI upscalers (such as the open-source Real-ESRGAN or SwinIR) or traditional image editing software to increase resolution. AI upscalers preserve and plausibly reconstruct fine detail, while traditional resampling only interpolates between existing pixels.

- Post-Processing: Tools like Adobe Photoshop or GIMP (open-source) are essential for color correction, compositional adjustments, adding textures, fixing minor AI artifacts (like distorted hands), and integrating AI elements into larger designs. This is where significant human creative input often occurs.

- Vectorization: For logos or illustrations that need to scale to any size without losing sharpness, consider vectorizing your AI-generated raster images using software like Adobe Illustrator or Inkscape (open-source).
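To decide how much upscaling a print job needs, multiply the print size by the target resolution. Print shops often ask for around 300 DPI (a common rule of thumb; confirm with your printer), so an 8 × 10 inch print needs 2400 × 3000 pixels. A minimal sketch of that arithmetic:

```python
import math


def required_pixels(print_inches: float, dpi: int = 300) -> int:
    """Pixels needed along one edge to print at the given size and DPI."""
    return math.ceil(print_inches * dpi)


def upscale_factor(width_px: int, height_px: int,
                   print_w_in: float, print_h_in: float, dpi: int = 300) -> float:
    """Smallest uniform scale factor that meets the target on both edges."""
    need_w = required_pixels(print_w_in, dpi)
    need_h = required_pixels(print_h_in, dpi)
    return max(need_w / width_px, need_h / height_px)


# A 1024 x 1024 image printed at 8 x 10 inches, 300 DPI:
print(required_pixels(8), required_pixels(10))  # 2400 3000
print(upscale_factor(1024, 1024, 8, 10))        # 2.9296875
```

Here a 4× upscaler covers the job comfortably; because the source is square and the print is 4:5, you would then crop the upscaled image to 2400 × 3000.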

Frequently Asked Questions About Open-Source AI Art Generators for Commercial Use

Q1: Can I really sell AI-generated art if it’s not copyrightable?

Yes, you can typically sell or use AI-generated art commercially even if it’s not copyrightable in your jurisdiction. “Not copyrightable” means you cannot register a copyright for it and might not have exclusive rights to prevent others from copying or using it. However, it doesn’t automatically restrict your ability to sell or license it for commercial purposes, provided you adhere to the underlying model’s license and don’t infringe on existing third-party copyrights or trademarks (e.g., character likenesses).

Q2: Do I need to attribute the AI generator or model when using images commercially?

It depends on the specific license of the AI model or platform you used. Some licenses (like certain Creative Commons licenses, e.g., CC BY) require attribution, while others (like the CreativeML Open RAIL++-M license that covers SDXL 1.0) do not require attribution for generated images. It’s always best practice to check the terms. If you’re using a blend of components, each might have its own attribution requirements. When in doubt, providing clear attribution (e.g., “Generated with Stable Diffusion via Automatic1111”) is a safe and ethical approach.

Q3: What if the AI model was trained on copyrighted images? Does that make my output infringing?

This is a complex and actively litigated area of law. The act of training an AI model on copyrighted data is a subject of ongoing legal debate, with some courts indicating it might constitute fair use. However, the *output* of the AI model could still be considered infringing if it substantially resembles a specific copyrighted work. To mitigate this risk for commercial use, focus on generating original concepts and significantly transforming AI outputs through your own creative input.

Q4: Do I need a powerful computer to run open-source AI art generators for commercial use?

For optimal performance, especially for higher resolution images or faster generation, a dedicated GPU with ample VRAM (typically 8GB or more, with 12GB+ being ideal for SDXL) is highly recommended. While some open-source UIs can run on less powerful hardware, it will be slower. Alternatively, you can use cloud-based services that offer access to these open-source models without needing local high-end hardware.
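A rough way to see why VRAM matters is that the model weights alone take about (parameter count) × (bytes per parameter). The sketch below covers weights only: activations, text encoders, and the VAE need additional memory, and tools that offload or quantize models can lower the requirement. The parameter counts are approximate public figures (about 2.6 billion for the SDXL UNet and about 12 billion for FLUX.1 models).

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate size of the model weights in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Approximate parameter counts (weights only, activations not included).
for label, params in [("SDXL UNet", 2.6e9), ("FLUX.1", 12e9)]:
    print(f"{label}: {weights_gb(params):.1f} GB in fp16, "
          f"{weights_gb(params, 'fp8'):.1f} GB in fp8")
```

Comparing the fp16 figure with your card’s VRAM is a quick first check before downloading a multi-gigabyte model.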

Q5: Can I modify an open-source AI art generator’s code for my commercial product?

Yes, that’s often the very purpose of “open-source”! Most open-source licenses (like MIT, Apache 2.0, or even GPLv3 for the software itself) permit modification and distribution for commercial purposes, provided you adhere to the license’s terms (e.g., retaining copyright notices, disclosing changes if required by the license). Always consult the specific license for the software/codebase you wish to modify. For example, InvokeAI is built to serve as a foundation for commercial products.
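For a commercial product, the main practical difference between these licenses is when you must share source code. The function below encodes a deliberately simplified reading: it ignores, for example, Apache 2.0’s NOTICE and change-marking requirements and the MIT license’s requirement to keep the copyright notice. Treat it as a checklist prompt rather than a legal determination.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    modified: bool         # you changed the code
    distributed: bool      # you ship copies (installers, containers, appliances)
    network_service: bool  # users interact with it remotely, e.g. a web app


def must_offer_source(license_id: str, usage: Usage) -> bool:
    """Simplified: does this use trigger an obligation to provide source code?"""
    lic = license_id.strip().lower()
    if lic in {"mit", "apache-2.0", "bsd-3-clause"}:
        return False  # permissive: keep notices, but no source-sharing duty
    if lic == "gpl-3.0":
        # GPLv3 obligations are triggered by distributing copies, not by
        # merely running the software as a hosted service.
        return usage.distributed
    if lic == "agpl-3.0":
        # AGPLv3 adds: a modified version offered to users over a network
        # must offer those users its source.
        return usage.distributed or (usage.modified and usage.network_service)
    raise ValueError(f"license not reviewed: {license_id}")


hosted_fork = Usage(modified=True, distributed=False, network_service=True)
print(must_offer_source("gpl-3.0", hosted_fork))   # False
print(must_offer_source("agpl-3.0", hosted_fork))  # True
```

The two calls contrast a modified ComfyUI (GPLv3) with a modified Automatic1111 WebUI (AGPLv3), each offered to customers as a hosted web service.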

Conclusion

The landscape of open-source AI art generators offers immense potential for commercial innovation, empowering businesses and creators to produce high-quality visual content with unprecedented flexibility. While the allure of ‘free’ and ‘open’ is strong, successful commercial application hinges on a diligent understanding of licensing agreements, copyright complexities, and best practices for creative intervention. By carefully navigating these waters and embracing the power of tools like the Stable Diffusion ecosystem, you can unleash a new era of visual creativity for your commercial ventures, creating truly unique and impactful artwork for your brand or clients.