
The AI Revolution: Self-Learning Models, GPT-5, and the Global Infrastructure Race

The landscape of technology is undergoing an unprecedented transformation. Artificial intelligence, once a realm of science fiction, is now reshaping industries and daily lives at an astonishing pace. This revolution is driven by remarkable advancements in self-learning models, the continuous evolution of large language models like GPT-5, and an intense global race to build the underlying AI infrastructure.

Key Takeaways:

- Self-learning AI, powered by reinforcement and unsupervised learning, enables systems to adapt and improve autonomously without constant human intervention.

- OpenAI’s GPT-5, officially released on August 7, 2025, represents a significant leap in multimodal capabilities, reasoning, and real-time task execution.

- The global AI infrastructure race involves massive investments in data centers, GPUs, and sustainable energy, with the US, China, and major tech companies leading the charge.

- This rapid AI expansion presents critical ethical challenges, including data privacy, algorithmic bias, and significant environmental impact due to soaring energy consumption.

The Dawn of Self-Learning AI Models

Artificial intelligence has progressed far beyond rule-based programming. We are now entering an era dominated by self-learning models. These sophisticated systems can refine their own algorithms and behaviors through continuous interaction with data and their environments. They learn from both successes and failures, reducing the need for constant human oversight.

Key technologies enabling this include:

- Reinforcement Learning (RL): This approach allows AI agents to learn optimal behavior through trial and error. They receive feedback in the form of rewards or penalties from their environment.

- Online Learning: Models update incrementally as new data arrives. This facilitates continuous adaptation without requiring a complete retraining process.

- Unsupervised and Semi-Supervised Learning: These models uncover patterns and structures within raw data. They do this without the need for extensive human labeling.
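
As a rough illustration of the first two ideas, the sketch below runs an epsilon-greedy agent on a toy multi-armed bandit. It learns by trial and error from reward feedback (reinforcement learning) and updates its estimates one observation at a time, with no stored history or retraining (online learning). The function name `run_bandit`, the reward means, and the parameter values are hypothetical choices made for this example; they do not describe how any production system mentioned in this article works.

```python
import random


def run_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a toy bandit with Gaussian rewards.

    true_means are hypothetical reward means, hidden from the agent.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running estimate of each arm's mean reward
    counts = [0] * n_arms       # number of times each arm has been pulled

    for _ in range(steps):
        # Trial and error: explore a random arm with probability epsilon,
        # otherwise exploit the arm that currently looks best.
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])

        # Feedback from the environment: a noisy reward.
        reward = rng.gauss(true_means[arm], 1.0)

        # Online update: incremental mean, one observation at a time.
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    return estimates, counts


if __name__ == "__main__":
    estimates, counts = run_bandit([0.2, 0.5, 0.9])
    print("pull counts:", counts)
    print("estimated means:", [round(e, 2) for e in estimates])
```

Over enough steps the agent should pull the highest-reward arm most often, even though it is never told which arm that is. Real self-learning systems are far more elaborate, but the loop of acting, observing a reward, and updating incrementally is the same basic pattern.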

Recent breakthroughs highlight this shift. Meta’s latest AI systems are reportedly showing signs of self-improvement without direct human intervention. This development is seen as a crucial step towards achieving artificial superintelligence. Similarly, Sakana AI’s Transformer-squared model demonstrates real-time self-learning. It adapts instantly to new tasks without retraining or additional data. These advancements promise increased efficiency and scalability. They also allow AI to function effectively in dynamic, new domains.

The Anticipation of GPT-5 (and Beyond)

Large Language Models (LLMs) have fundamentally changed how we interact with AI. OpenAI’s GPT series stands at the forefront of this evolution. Following GPT-4o and other interim models, OpenAI officially released GPT-5 on August 7, 2025. This highly anticipated model unifies advanced reasoning and multimodal capabilities into a single system.

GPT-5 marks a significant leap in intelligence. According to OpenAI, it hallucinates less than prior models: with web search enabled, its responses are about 45% less likely to contain a factual error than GPT-4o's. Its enhanced capabilities span multiple areas:

- Multimodal Integration: GPT-5 seamlessly processes text, images, audio, and video. This enables applications like real-time video analysis and sophisticated image-to-text-to-action workflows.

- Advanced Reasoning and Logic: The model demonstrates more robust reasoning, improving reliability in critical applications. It is designed for complex, multi-step workflows.

- Coding and Task Execution: GPT-5 is OpenAI’s best coding model to date. It offers improvements in complex front-end generation and debugging. It also integrates “agentic” reasoning, enabling autonomous performance of multi-step tasks.

- Personalization: Users can select different personalities for GPT-5, allowing for a customized conversational tone and style.

The release of GPT-5 intensifies the competition among AI developers. Companies are pouring billions into research and development to keep pace. The future of LLMs points towards even greater specialization, efficiency, and responsible development.

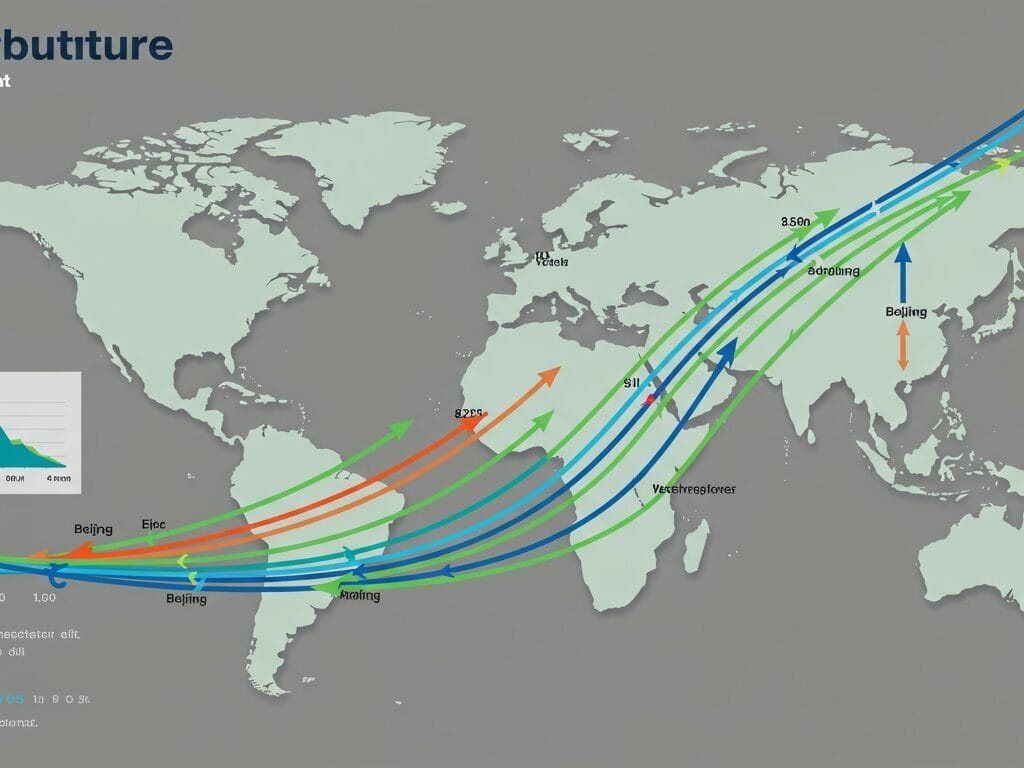

The Global AI Infrastructure Race

The rapid expansion of AI necessitates a massive underlying infrastructure. This includes powerful hardware and extensive data center networks. The demand for compute power, especially Graphics Processing Units (GPUs), is insatiable.

This has sparked an intense global competition, often termed an “AI cold war,” between nations and tech giants.

Key Players and Investments

Major tech companies are making staggering investments to build out this infrastructure:

- Nvidia: A dominant player, its GPUs and CUDA platform are crucial for data center AI chips.

- Cloud Providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud are leading the charge. They offer scalable machine learning services and massive data center footprints. Google, for instance, pledged a $9 billion investment to expand its U.S. data center footprint for AI and cloud services.

- Semiconductor Manufacturers: Companies like AMD, SK Hynix, Samsung, and Taiwan Semiconductor Manufacturing Company (TSMC) are vital. They produce the advanced chips required for AI workloads.

- Other Innovators: IBM, Intel, Meta, Cisco, Arista Networks, and Broadcom are also key players. They contribute to various aspects of AI infrastructure, from specialized hardware to networking.

Overall, the global AI data center market is projected to reach USD 933.76 billion by 2030. This growth is driven by the rising demand for high-performance computing across sectors like healthcare, finance, and manufacturing. Some analyses suggest a $5.2 trillion investment into data centers will be needed by 2030 to meet AI-related demand alone.

The Energy and Environmental Challenge

This exponential growth in AI also comes with significant environmental implications. AI models consume enormous amounts of electricity, primarily for training and powering data centers. Data centers could account for 20% of global electricity use by 2030-2035, straining power grids. In the U.S. alone, power consumption by data centers is on track to account for almost half of the electricity demand growth by 2030.

Beyond electricity, AI’s environmental footprint includes:

- Water Consumption: Advanced cooling systems in AI data centers require substantial water.

- E-waste: The short lifespan of GPUs and other high-performance computing components leads to a growing problem of electronic waste.

- Natural Resource Depletion: Manufacturing these components requires rare earth minerals.

The industry is exploring solutions like more energy-efficient hardware, smarter model training methods, and using AI itself to optimize energy use and grid maintenance. However, the demand continues to surge, with training a single leading AI model potentially requiring over 4 gigawatts of power by 2030.
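
Note that gigawatts measure a rate of power draw, not a total amount of energy. The sketch below converts a sustained power draw into energy in gigawatt-hours. The 4 GW value is the figure quoted above; the 30-day duration is a purely hypothetical assumption for the arithmetic, not a number from any report.

```python
HOURS_PER_DAY = 24


def energy_gwh(power_gw, days):
    """Energy in gigawatt-hours for a constant power draw over `days` days."""
    return power_gw * days * HOURS_PER_DAY


if __name__ == "__main__":
    power_gw = 4.0  # power figure quoted in the text
    run_days = 30   # hypothetical duration, chosen only for illustration
    print(energy_gwh(power_gw, run_days), "GWh")  # 4 * 30 * 24 = 2880.0 GWh
```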

For more insights into energy efficiency challenges, you can refer to reports from organizations like the International Energy Agency.

Impact on Industries and Society

The AI revolution has far-reaching consequences across various sectors and for society as a whole. AI is expected to contribute approximately US$15.7 trillion to global GDP by 2030, largely due to increased productivity and consumption.

Industries leveraging AI include:

- Healthcare: AI accelerates diagnoses and enables earlier, potentially life-saving treatments.

- Finance: Improved decision-making and fraud detection.

- Manufacturing: Increased automation and efficiency.

- Software Development: Advanced code generation, system architecture, and debugging. [5]

However, alongside these benefits, significant ethical and societal challenges persist. These include concerns about data privacy and security, as AI systems process vast amounts of personal and sensitive data. [18, 29] Algorithmic bias, inherited from training data, can lead to unfair or discriminatory outcomes in critical areas like hiring or lending.

The future of work is also a key consideration: according to IMF analysis, nearly 40% of global employment is exposed to AI. While some jobs may be displaced, new jobs and job categories are expected to emerge, requiring upskilling and reskilling of the workforce.

Addressing these ethical implications—including transparency in decision-making, accountability, and the potential for misuse in areas like misinformation or cyberattacks—is crucial for responsible AI development.

For a deeper dive into responsible AI development, explore resources from organizations that work on AI ethics and governance, such as the World Economic Forum.

Conclusion

The AI revolution, fueled by self-learning models and powerful new iterations like GPT-5, is accelerating at an unprecedented rate. This advancement is profoundly transforming industries, enhancing productivity, and creating new possibilities. However, it also demands an enormous global infrastructure, leading to fierce competition and significant environmental challenges. Navigating the ethical complexities of bias, privacy, and societal impact will be paramount. As AI continues to evolve, a balanced approach that prioritizes responsible innovation, sustainable growth, and human-centric development will be essential to harness its full potential for the benefit of all.

Frequently Asked Questions (FAQ)

What is self-learning AI?

Self-learning AI refers to systems that can automatically refine their own algorithms and behaviors through continuous interaction with data and environments, requiring minimal manual retraining or reprogramming. They learn from experience and adapt in real time.

What are the key capabilities of GPT-5?

GPT-5, released on August 7, 2025, offers enhanced capabilities in multimodal integration (processing text, images, audio, video), advanced reasoning, improved coding, reduced hallucinations, and personalization features. It unifies the strengths of previous models into a single, powerful system.

Why is AI infrastructure so important?

AI infrastructure, comprising high-performance computing hardware (like GPUs) and vast data centers, is crucial because AI models, especially large language models, require immense computational resources for training and deployment. Without robust infrastructure, the advancements in AI would be severely limited.

What are the environmental concerns related to AI?

The primary environmental concerns include the massive electricity consumption of AI data centers, significant water usage for cooling, and the growing problem of electronic waste from obsolete hardware. The manufacturing of AI components also depletes natural resources.

How does AI impact jobs and the economy?

AI is expected to significantly boost global GDP through increased productivity. While some jobs may be automated, AI is also predicted to create new jobs and categories, requiring a global workforce to adapt and upskill. It can also exacerbate inequality if not managed properly.

What ethical challenges does AI pose?

Key ethical challenges include ensuring transparency in AI decision-making, mitigating algorithmic bias present in training data, safeguarding data privacy and security, addressing potential job displacement, and preventing misuse for disinformation or cyberattacks.